by Blogmeister Meisterblogger | Mar 5, 2025 | Entertainment, IMyTH, Interactive Storytelling, Technology

Imagine meeting a character who isn’t just on a screen, but right in front of you—talking, reacting, and bringing an adventure to life in your own space. That’s the magic of iMyth Heroes, where technology and storytelling combine to create interactive experiences...

by Blogmeister Meisterblogger | Sep 5, 2019 | Technology

In a recent Road to VR article on Google hand tracking, I found out about BlazePalm, a technology Google has just made available to the public. It is part of MediaPipe, an open-source, cross-platform framework for developers looking to build...

by Blogmeister Meisterblogger | Aug 21, 2019 | Entertainment, Immersive Theme-worlds, IMyTH, Industry, Interactive Storytelling, Technology

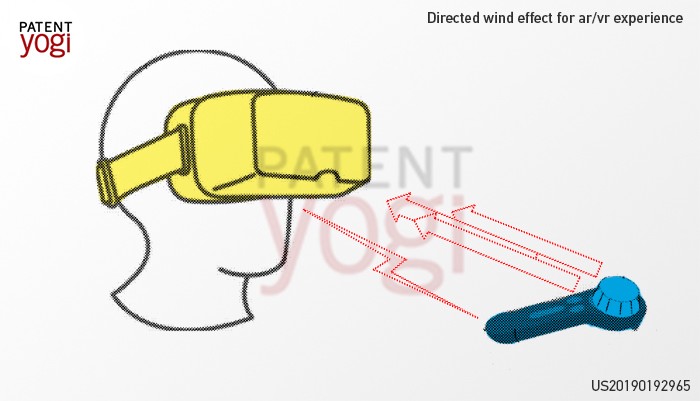

I like to stay abreast of technological developments that advance not only location-based entertainment but interactive storytelling as well. This new announcement from Disney sounds interesting. Disney Research just filed a patent for a new head-mounted...

by Blogmeister Meisterblogger | Jul 26, 2018 | Technology

A quick update on the evolution of SteamVR 2.0 Tracking. A VR arcade proprietor, Tower Tag, successfully pulled off a large multiplayer experience involving six players using four new SteamVR base stations. SteamVR 2.0 Test. Six players in one space tracked by 4...

by Blogmeister Meisterblogger | Jul 11, 2018 | Technology

One of the Holy Grails for rising above the first generation of VR is moving from room-scale tracking to warehouse- or house-scale tracking. During the prototyping phase of The Courier, the iMyth crew was able to extend SteamVR Tracking to 20 feet by 20 feet. There...

by Blogmeister Meisterblogger | Jun 1, 2018 | Technology

Stony Brook University, NVIDIA, and Adobe are presenting their paper on infinite walking using Dynamic Saccadic Redirection at SIGGRAPH 2018. This is a really neat take on the age-old problem of redirected walking. The last “great”...