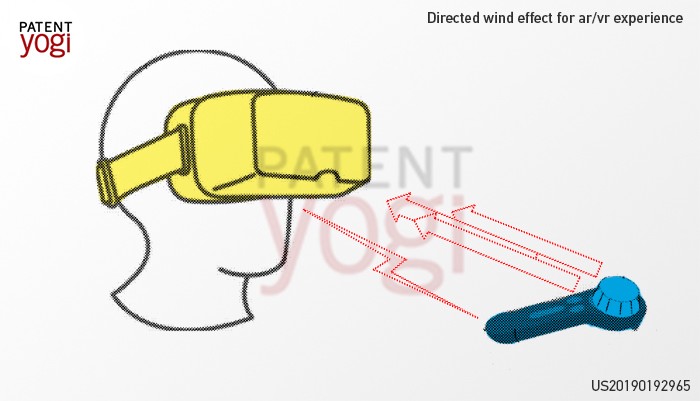

I like to stay abreast of technological developments which advance not only location-based entertainment but interactive storytelling as well. This new announcement from Disney sounds interesting. Disney Research just filed a patent for a new head-mounted display (HMD) and a gizmo referred to as an “Air Flow Generator”. I have no information about the HMD, but the air-flow generator sounds interesting.

This air-flow generator evidently produces directed gusts of air which can be used to simulate the haptic sensation of a moving virtual object, such as a swinging sword or a moving animal. In addition, the generator can scent the gusts it produces to simulate particular smells, such as soil or flowers.

There are certainly many other airflow generators (fans) which can be actuated by trigger events within an experience. However, these have always produced very “low frequency, high amplitude” sensations, for lack of better terms. This generator sounds very localized and directed. Other manufacturers have also promised HMD attachments which generate custom scents. This gizmo, as an external generator, promotes more of a collaborative, shared experience. The Void produces similar sensations in their experiences, though I am unfamiliar with their technology.

In a conversation many years ago, an Imagineer told me exactly how a gizmo like this could be implemented. Maybe he decided to have Disney Research actually build it? Either way, I think Disney is on to a product which can really contribute to large-scale experiences.

If this product is what I think it is, then I believe it could make a significant contribution to the location-based entertainment market. An apparatus such as this could not be marketed for home use. If location-based immersive experiences are to be bigger, bolder and more fantastic than home-based experiences, then this technology could help widen the gap between the two. Of course, a lot depends on how reconfigurable this device is. However, if it is fully reconfigurable and “dynamic”, then it will contribute to an experience that is physical, collaborative and highly memorable (always unique). Just what the iMyth team ordered.