by Blogmeister Meisterblogger | Aug 21, 2019 | Entertainment, Immersive Theme-worlds, IMyTH, Industry, Interactive Storytelling, Technology

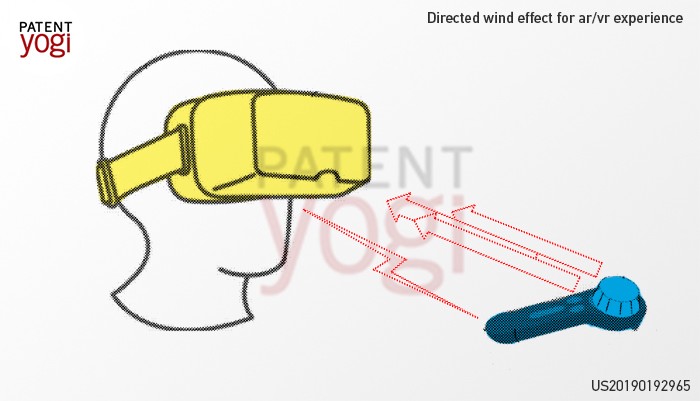

I like to stay abreast of technological developments that advance not only location-based entertainment but also interactive storytelling. This new announcement from Disney sounds interesting. Disney Research just filed a patent for a new head-mounted...

by Blogmeister Meisterblogger | Aug 2, 2017 | Technology

Real-time digital humans have been a long time coming. Although the results are not quite there yet, a very talented group just put together the best attempt so far: Mike Seymour, co-founder of and interviewer for FXGuide, teamed up with companies such as Epic Games, Cubic...