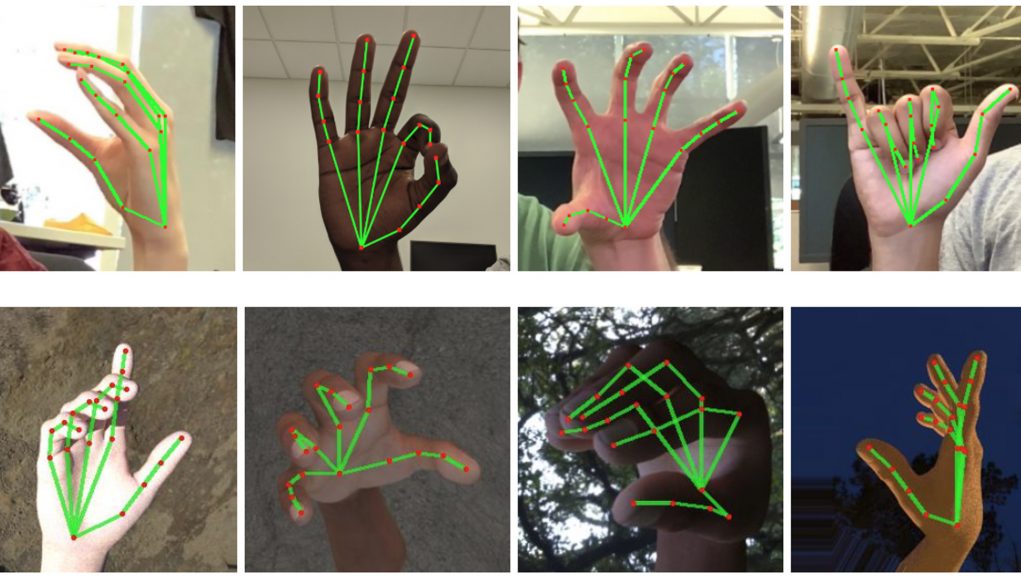

In a recent web article on Road to VR: Google hand tracking, I found out about a technology Google has just made available to the public called BlazePalm. It is part of MediaPipe, Google's open source cross-platform framework for developers looking to build processing pipelines that handle perceptual data such as video and audio. BlazePalm is a new approach to hand perception that uses machine learning to provide high-fidelity hand and finger tracking, inferring 21 3D ‘keypoints’ of a hand from just a single frame.

Google Research engineers Valentin Bazarevsky and Fan Zhang describe their work in their full blogpost. While I have not read the full blogpost myself, Bazarevsky and Zhang write, “Whereas current state-of-the-art approaches rely primarily on powerful desktop environments for inference, our method achieves real-time performance on a mobile phone, and even scales to multiple hands.” A few of the major points from the blogpost are:

- The BlazePalm technique achieves an average precision of 95.7% in palm detection, the researchers claim.

- The model learns a consistent internal hand pose representation and is robust even to partially visible hands and self-occlusions.

- The existing pipeline supports counting gestures from multiple cultures, e.g. American, European, and Chinese, and various hand signs including “Thumb up”, closed fist, “OK”, “Rock”, and “Spiderman”.

- Google is open sourcing its hand tracking and gesture recognition pipeline in the MediaPipe framework, accompanied by the relevant end-to-end usage scenario and source code, here.
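
To make the gesture-counting idea a bit more concrete, here is a minimal sketch of how a finger count could be derived from 21 3D keypoints. This is not Google's gesture recognizer: it is a toy heuristic of my own that treats a finger as extended when its tip is farther from the wrist than its middle joint. The landmark indices assume MediaPipe's hand layout (0 for the wrist, then four points per finger from base to tip, thumb first), and the names `count_extended_fingers` and `synthetic_hand` are hypothetical helpers made up for illustration.

```python
import math

# Assumed layout of the 21 keypoints: 0 = wrist, then four points per
# finger (base to tip), in the order thumb, index, middle, ring, pinky.
WRIST = 0
FINGERS = {
    "thumb": (2, 4),    # (middle joint, tip)
    "index": (6, 8),
    "middle": (10, 12),
    "ring": (14, 16),
    "pinky": (18, 20),
}


def count_extended_fingers(keypoints):
    """Count fingers whose tip lies farther from the wrist than its middle joint.

    `keypoints` is a sequence of 21 (x, y, z) tuples. This is a rough
    heuristic, and it is least reliable for the thumb.
    """
    if len(keypoints) != 21:
        raise ValueError("expected 21 keypoints, got %d" % len(keypoints))
    wrist = keypoints[WRIST]
    count = 0
    for joint, tip in FINGERS.values():
        if math.dist(keypoints[tip], wrist) > math.dist(keypoints[joint], wrist):
            count += 1
    return count


def synthetic_hand(extended):
    """Build 21 fake keypoints; `extended` is five booleans, thumb first."""
    points = [(0.0, 0.0, 0.0)]  # wrist at the origin
    for i, is_out in enumerate(extended):
        x = float(i - 2)  # spread the fingers along the x axis
        # A straight finger climbs away from the wrist; a curled one folds back.
        ys = (1.0, 2.0, 3.0, 4.0) if is_out else (1.0, 2.0, 1.5, 1.0)
        points.extend((x, y, 0.0) for y in ys)
    return points


if __name__ == "__main__":
    # Index and middle fingers out, everything else curled.
    hand = synthetic_hand([False, True, True, False, False])
    print(count_extended_fingers(hand))  # prints 2
```

In a real pipeline the keypoints would come from the hand tracking model rather than `synthetic_hand`, and a production gesture classifier would likely look at joint angles instead of a single distance comparison.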

While this is an interesting technology geared towards the mobile community, I feel it could have an even greater impact on the AR/VR/MR communities. This looks like some pretty decent hand recognition technology being made available to the entire community, free of charge. While this may have a deep financial impact on companies such as Leap Motion, I feel it democratizes the hand recognition world. I will need to reserve judgement until I see the technology in action, but this may perform even better than Leap Motion, and without the purchase of expensive external hardware.

Until now, implementing hand tracking in a VR experience was only an expensive pipe dream. But now, with a bit of time and ingenuity, this technology can be brought to real-time VR without expensive hardware and software. As the researchers continue to refine and tighten their technology, I can’t help but wonder if other tracking barriers, such as facial tracking, are also on the verge of crumbling!